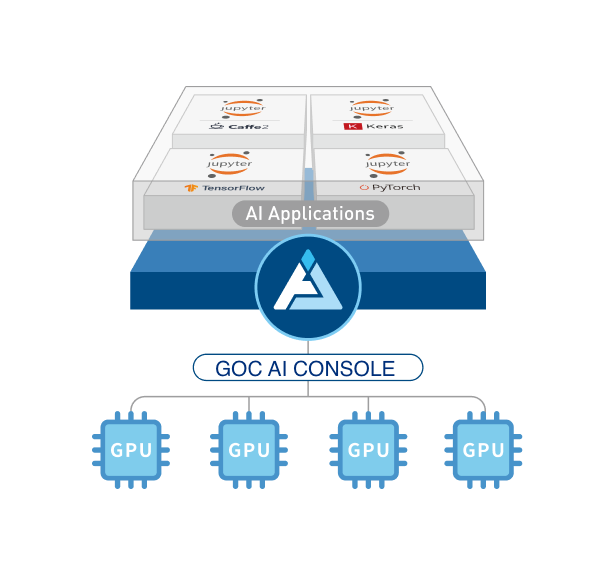

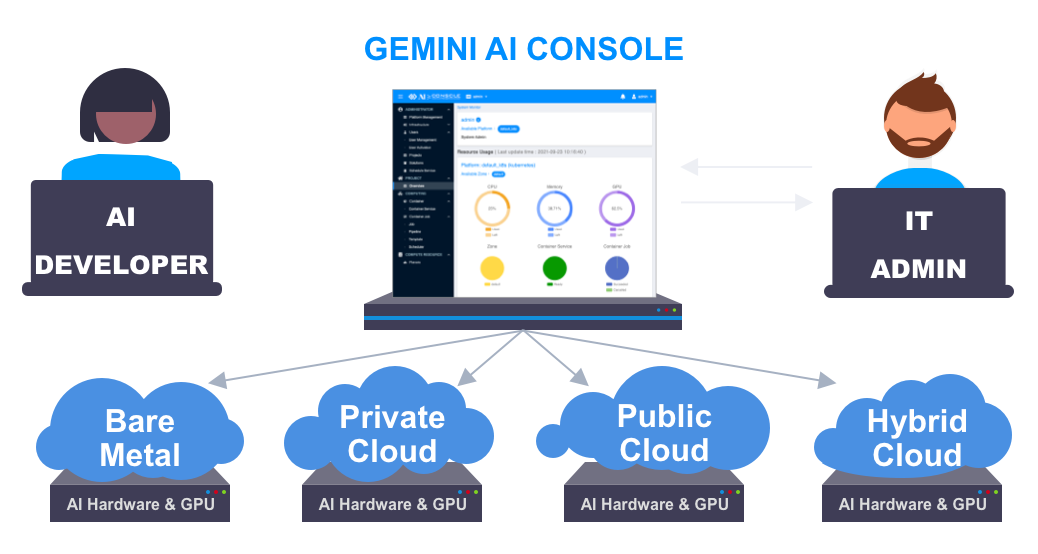

GOC AI Console

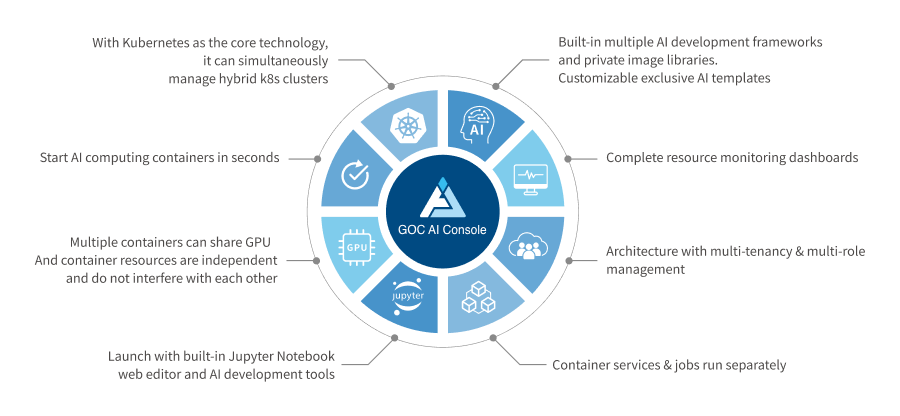

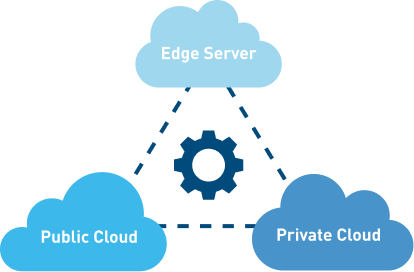

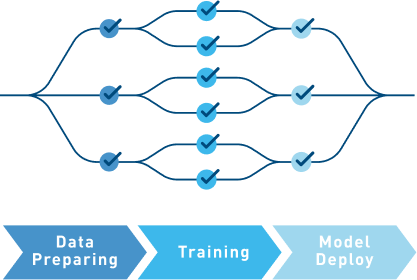

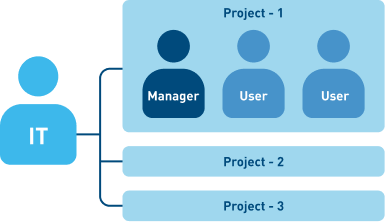

The GOC AI Console builds GPU management nodes that help enterprise organizations collaborate across AI projects. By simplifying the underlying infrastructure, it lets teams focus their time and people on core algorithms, so companies can uncover business opportunities in massive datasets more efficiently.

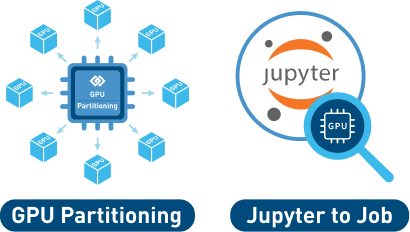

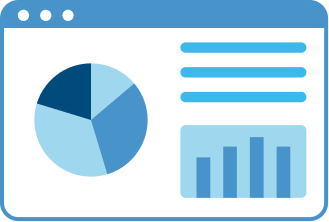

Through the GOC AI Console portal, data scientists and developers can quickly launch a cluster environment preloaded with large datasets and AI tools. GOC's GPU partitioning technology divides GPUs among workloads to push GPU utilization as high as possible.