Institute of Nuclear Energy Research - AI Cloud

1. Case Summary

The Institute of Nuclear Energy Research (INER), under the Atomic Energy Council of the Executive Yuan, is actively adopting artificial intelligence and machine learning technology across a range of research projects. INER uses Gemini AI Console from Gemini Open Cloud to integrate and centrally manage multiple GPU workstations and disk arrays as a single GPU computing environment, so that its research and development team can focus on creative work and deliver more results.

This case requires a system with account management. Through a convenient web interface, users can flexibly select the computing resources they need. A single computing job can use anywhere from zero to multiple GPU cards for parallel computing. The system also integrates multiple AI frameworks and libraries in different versions (such as TensorFlow and PyTorch), so users can choose software and resources according to their needs.
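The requirement above (a user picks a framework version and anywhere from zero to several GPUs) can be illustrated with a minimal sketch. This is not Gemini AI Console's API; `JobRequest`, `validate_request`, and the `AVAILABLE_IMAGES` catalog with its version numbers are hypothetical names and values chosen only for illustration.

```python
from dataclasses import dataclass

# Hypothetical catalog of framework images. The actual frameworks and
# versions offered to INER users are not listed in the case study.
AVAILABLE_IMAGES = {
    ("tensorflow", "1.15"),
    ("tensorflow", "2.4"),
    ("pytorch", "1.8"),
}


@dataclass(frozen=True)
class JobRequest:
    """What a user selects in a self-service form (illustrative only)."""
    user: str
    framework: str
    version: str
    gpu_count: int  # 0 means a CPU-only job


def validate_request(req: JobRequest, free_gpus: int) -> None:
    """Raise ValueError if the request cannot be satisfied; return None otherwise."""
    if req.gpu_count < 0:
        raise ValueError("gpu_count must be zero or more")
    if req.gpu_count > free_gpus:
        raise ValueError(
            f"requested {req.gpu_count} GPUs but only {free_gpus} are free"
        )
    if (req.framework.lower(), req.version) not in AVAILABLE_IMAGES:
        raise ValueError(f"no image for {req.framework} {req.version}")


# Example: a two-GPU PyTorch job when four GPUs are free passes validation.
request = JobRequest(user="alice", framework="PyTorch", version="1.8", gpu_count=2)
validate_request(request, free_gpus=4)
```

A request with `gpu_count=0` is valid in this sketch, which mirrors the case study's point that a job may run without any GPU.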

Industry

Government

Region

Taiwan

Use Case

- Artificial intelligence/big data computing

- GPU, Kubernetes, Gemini AI Console

2. Pain Points and Challenges

- Lack of effective unified management of the hosts

- Resources are often unevenly distributed or idle, and cannot be used by multiple users effectively.

- Resources must be shared among different teams, so effective and secure management is required.

Before INER adopted Gemini AI Console, it had several AI computing workstations with GPUs and disk array machines, but no unified management of them. As a result, the three computing hosts and their storage could not be allocated effectively. Some applications occupied most of the GPU resources with no proper distribution, while other resources sat idle or were wasted and could not be allocated to users from different teams.

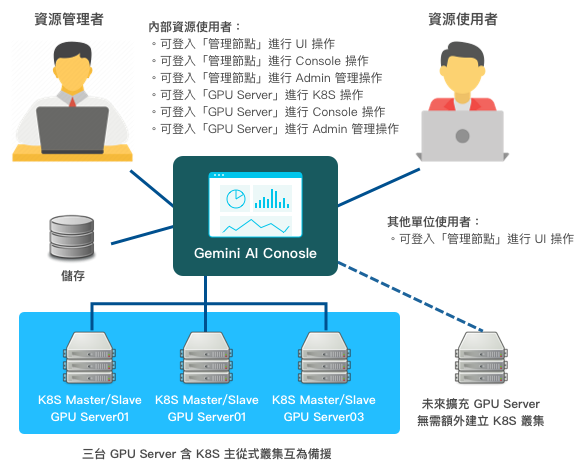

3. Architecture Design Features

- A single node can manage multiple GPU hosts.

- Provide an effective three-tier, multi-project management mechanism.

- Three GPU Servers are mutually redundant.

- Future expansion does not require creating another K8S cluster.

Gemini Open Cloud drew up a new plan after evaluating and diagnosing INER's original GPU computing environment. Gemini Open Cloud carried out all configuration and installation, including network settings, operating system installation, virtualization software services, management system installation, and resource monitoring. INER therefore needs only a single point of contact to resolve any issue.

To get the best performance out of the original GPU computing hosts, Gemini Open Cloud planned an AI resource management node and installed the GPU cluster management software 'Gemini AI Console' on it to meet the functional requirements, including GPU resource allocation control and account management.

At the same time, Gemini Open Cloud configured INER's multiple GPU computing hosts in HA (High Availability) mode. If a single computing host fails, the affected containers are automatically restarted on the other computing hosts instead of becoming unavailable.
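The HA behavior described above can be pictured with a toy model. The case study does not describe how Gemini AI Console or the underlying K8S cluster actually reschedules containers, so the function below is only a sketch under stated assumptions: `reschedule_after_failure`, the host names, and the "most free GPUs first" placement rule are all hypothetical.

```python
from typing import Dict, List, Tuple

Placement = Tuple[str, int]  # (host name, number of GPUs the container uses)


def reschedule_after_failure(
    capacity: Dict[str, int],
    placements: Dict[str, Placement],
    failed_host: str,
) -> Tuple[Dict[str, Placement], List[str]]:
    """Move containers off a failed host onto surviving hosts with free GPUs.

    Returns the new placements and the names of containers that could not
    be placed because no surviving host had enough free GPUs.
    """
    # Free GPUs on each surviving host, before any container is moved.
    free = {h: c for h, c in capacity.items() if h != failed_host}
    for host, gpus in placements.values():
        if host != failed_host:
            free[host] -= gpus

    # Containers on healthy hosts stay where they are.
    new_placements = {n: p for n, p in placements.items() if p[0] != failed_host}
    pending: List[str] = []

    # Place the largest affected containers first (an assumed heuristic).
    affected = sorted(
        ((n, g) for n, (h, g) in placements.items() if h == failed_host),
        key=lambda item: -item[1],
    )
    for name, gpus in affected:
        candidates = [h for h, f in free.items() if f >= gpus]
        if not candidates:
            pending.append(name)
            continue
        target = max(candidates, key=lambda h: free[h])
        free[target] -= gpus
        new_placements[name] = (target, gpus)
    return new_placements, pending


# Three hosts with four GPUs each (hypothetical sizes); host "gpu1" fails.
capacity = {"gpu1": 4, "gpu2": 4, "gpu3": 4}
placements = {"a": ("gpu1", 2), "b": ("gpu2", 1), "c": ("gpu3", 3)}
new_placements, pending = reschedule_after_failure(capacity, placements, "gpu1")
print(new_placements["a"])  # ('gpu2', 2)
print(pending)              # []
```

In this model, a container is restarted elsewhere only if a surviving host has enough free GPUs; otherwise it stays pending until capacity is available.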

4. Result

- Computing resources are still effectively utilized even with the hosts set to HA mode.

- The use of resources is efficient, and there are additional resources available for other teams in INER.

- A GPU server purchased the following year joined the management system painlessly.

- High utilization of the IT investment also translates into greater R&D output.

Before Gemini AI Console was adopted, INER's three GPU computing hosts could not be managed effectively, which led to poor resource allocation and long waiting times for the R&D team. After adopting Gemini AI Console, resource utilization can still be maximized even with the GPU hosts set to HA mode. The GPU computing resources are no longer used only by the original team: under the management provided by Gemini AI Console, free resources can be shared with other teams. Effective access control ensures that the projects of different teams do not interfere with each other.

Because resources are now used efficiently, internal demand at INER kept growing, and the GPU server hardware was expanded the following year. Under the architecture planned by Gemini Open Cloud, the new hardware joined the Gemini AI Console system painlessly, with no need to build another K8S cluster, so the added resources could be used immediately.